Will AI search prove to be the end of Google?

That’s one big question surrounding search, one that would have seemed inconceivable to anyone who had witnessed Google’s decades of dominance.

But over the last year, we’ve seen study after study hinting at a fall, from one saying users are happier with AI summaries in their results to another pointing to a preference for genAI over traditional search engines. Google still accounts for 87.3% of all search engine referral traffic, but the AI insurgency can’t be ignored. Even with Google placing its own AI Overviews near the top of its results pages, the question of Google’s future is a fair one to ask.

Of course, you’ll have to forgive publishers for looking a bit more inward, being a bit more concerned about their place in all of this, and asking other existential questions.

Ask any entry-level chatbot and it’ll give you the generic diagnosis that organic search and Google Ads are changing rapidly, but those battles are at least familiar ones for publishers. A more recent worry brought on by the rise of AI is citation and attribution. When a publisher has already supplied an answer, so that an AI chatbot needn’t hallucinate one, does that source get the credit it deserves?

The Tow Center for Digital Journalism, following up on its earlier research into ChatGPT’s attribution and representation problems, expanded its scope to other generative search tools earlier this month and concluded that “they’re all bad at citing news.”

“Chatbots were generally bad at declining to answer questions they couldn’t answer accurately, offering incorrect or speculative answers instead,” write Klaudia Jaźwińska and Aisvarya Chandrasekar in the Columbia Journalism Review. “Our findings were consistent with our previous study, proving that our observations are not just a ChatGPT problem, but rather recur across all the prominent generative search tools that we tested.”

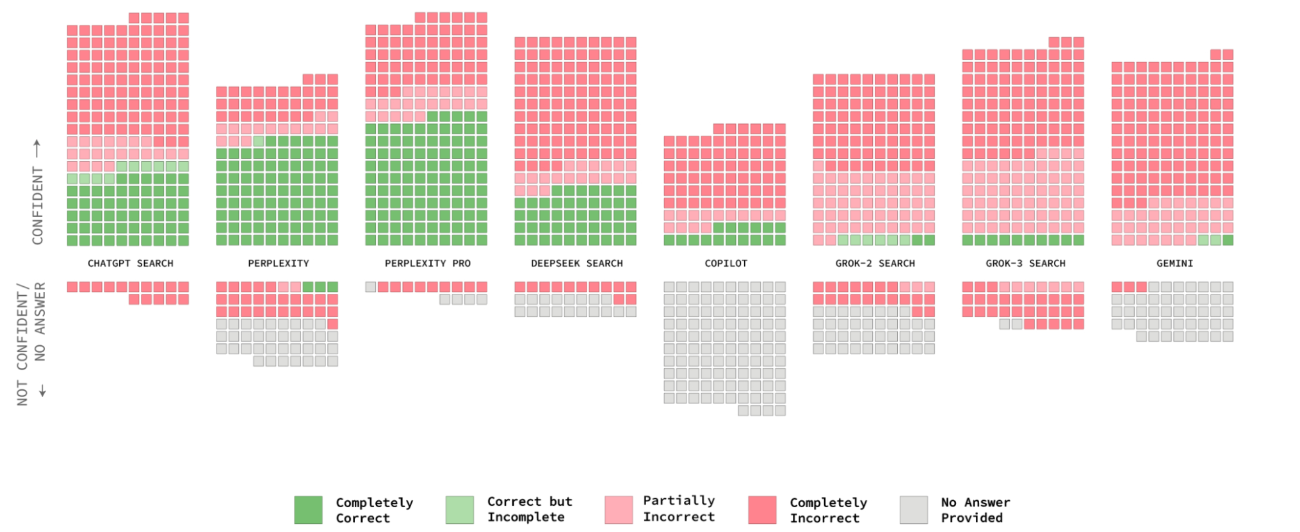

The researchers gave eight leading generative search tools 200 news-article excerpts from 20 publishers. The excerpts were chosen because they, “if pasted into a traditional Google search, returned the original source within the first three results.” For each excerpt, the chatbots were asked to identify the source article, the publication, and the URL. Collectively, the research found, they returned incorrect answers to more than 60% of the queries.

(Source: Columbia Journalism Review)

“Overall, the chatbots often failed to retrieve the correct articles,” the research found. “Across different platforms, the level of inaccuracy varied, with Perplexity answering 37 percent of the queries incorrectly, while Grok 3 had a much higher error rate, answering 94 percent of the queries incorrectly.”

The study found that most of the chatbots offered inaccurate answers “with alarming confidence, rarely using qualifying phrases such as ‘it appears,’ ‘it’s possible,’ ‘might,’ etc., or acknowledging knowledge gaps with statements like ‘I couldn’t locate the exact article.’” On confidence, the study found that the premium models (which it identified as the $20/month Perplexity Pro and the $40/month Grok 3) were more “confidently incorrect” than their free counterparts.

“Our tests showed that while both answered more prompts correctly than their corresponding free equivalents, they paradoxically also demonstrated higher error rates,” the research says. “This contradiction stems primarily from their tendency to provide definitive, but wrong, answers rather than declining to answer the question directly. The fundamental concern extends beyond the chatbots’ factual errors to their authoritative conversational tone, which can make it difficult for users to distinguish between accurate and inaccurate information. This unearned confidence presents users with a potentially dangerous illusion of reliability and accuracy.”

To make matters truly infuriating, even licensing deals don’t seem to improve citations. The research referenced OpenAI’s partnerships with media groups, including what it counted as OpenAI’s 17th news content licensing deal (with the Guardian, in February).

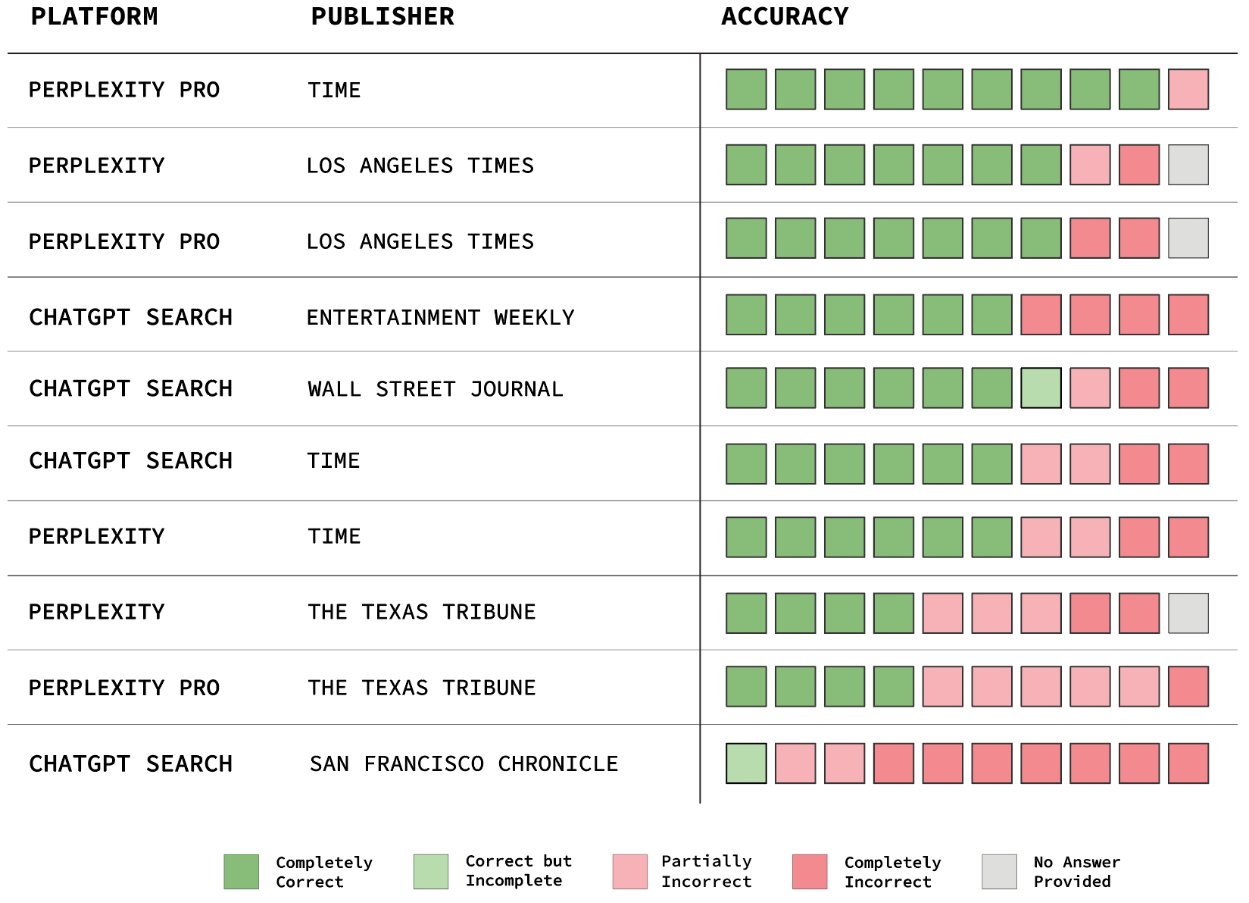

“Such deals,” the research says, “might raise the expectation that user queries related to content produced by partner publishers would yield more accurate results. However, this was not what we observed.”

(Source: Columbia Journalism Review)

For instance, ten excerpts from the San Francisco Chronicle were given to ChatGPT, even though OpenAI has a “strategic content partnership” with Hearst, the Chronicle’s owner. The chatbot correctly identified only one excerpt, and though it named the publisher in that instance, it didn’t provide the URL.

That casts last week’s proposal by the News/Media Alliance to the Trump Administration on responsible AI development in a new light, considering that much of the outline concerned intellectual property, voluntary licensing, and “appropriate transparency.” Once that hill is finally conquered, will citation problems that already seem ingrained simply be a lost cause?

As for the searchers among us, perhaps following the simple advice of The Neuron newsletter (an AI cheerleader if there ever was one) is all we can do.

“Take AI search results — especially news citations — with a massive grain of salt. Or better yet, do a traditional Google search to verify.”
